cudaGraph

1 Introduction

Based on the official documentation, this post explains what a cudaGraph is and why you would use one.

1.1 What is a cudaGraph

The official description is as follows:

CUDA Graphs present a new model for work submission in CUDA. A graph is a series of operations, such as kernel launches, connected by dependencies, which is defined separately from its execution. This allows a graph to be defined once and then launched repeatedly.


My own understanding:

Without a graph: the CPU launches each kernel separately, so multiple kernels mean multiple launches.

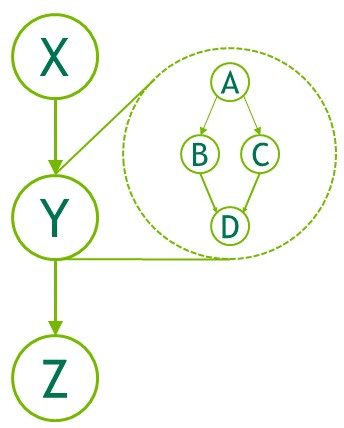

With a graph: the kernels are recorded into one graph, and a single graph launch runs all of them. In the figure, Y is a graph containing kernels A, B, C and D; launching Y once is equivalent to launching A, B, C and D.
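To make "Y contains A, B, C and D" concrete, the minimal sketch below records four launches into one graph and inspects it. `kernelA` to `kernelD` and `countKernelNodesInY` are hypothetical names, the graph is recorded with stream capture (section 2.2), and `cudaGraphInstantiateWithFlags` assumes CUDA 11.4 or newer. `cudaGraphGetNodes` and `cudaGraphNodeGetType` check that Y holds four kernel nodes, and a single `cudaGraphLaunch` then runs all of them.

```cuda
#include <cuda_runtime.h>
#include <cstdio>
#include <cstdlib>
#include <vector>

// Abort with a message if a CUDA runtime call fails.
#define CUDA_CHECK(call)                                              \
    do {                                                              \
        cudaError_t err_ = (call);                                    \
        if (err_ != cudaSuccess) {                                    \
            std::fprintf(stderr, "%s failed: %s\n", #call,            \
                         cudaGetErrorString(err_));                   \
            std::exit(EXIT_FAILURE);                                  \
        }                                                             \
    } while (0)

// Hypothetical stand-ins for kernels A, B, C and D (they do nothing).
__global__ void kernelA() {}
__global__ void kernelB() {}
__global__ void kernelC() {}
__global__ void kernelD() {}

// Records A, B, C, D into one graph "Y" and returns how many of its
// nodes are kernel nodes.
size_t countKernelNodesInY() {
    cudaStream_t stream;
    CUDA_CHECK(cudaStreamCreate(&stream));

    cudaGraph_t y;
    CUDA_CHECK(cudaStreamBeginCapture(stream, cudaStreamCaptureModeGlobal));
    // During capture these launches are recorded, not executed.
    kernelA<<<1, 1, 0, stream>>>();
    kernelB<<<1, 1, 0, stream>>>();
    kernelC<<<1, 1, 0, stream>>>();
    kernelD<<<1, 1, 0, stream>>>();
    CUDA_CHECK(cudaStreamEndCapture(stream, &y));

    // Passing nullptr for the node array only queries the node count.
    size_t numNodes = 0;
    CUDA_CHECK(cudaGraphGetNodes(y, nullptr, &numNodes));
    std::vector<cudaGraphNode_t> nodes(numNodes);
    CUDA_CHECK(cudaGraphGetNodes(y, nodes.data(), &numNodes));

    size_t kernelNodes = 0;
    for (cudaGraphNode_t node : nodes) {
        cudaGraphNodeType type;
        CUDA_CHECK(cudaGraphNodeGetType(node, &type));
        if (type == cudaGraphNodeTypeKernel) ++kernelNodes;
    }

    // One launch of Y replaces the four separate launches recorded above.
    cudaGraphExec_t yExec;
    CUDA_CHECK(cudaGraphInstantiateWithFlags(&yExec, y, 0));
    CUDA_CHECK(cudaGraphLaunch(yExec, stream));
    CUDA_CHECK(cudaStreamSynchronize(stream));

    CUDA_CHECK(cudaGraphExecDestroy(yExec));
    CUDA_CHECK(cudaGraphDestroy(y));
    CUDA_CHECK(cudaStreamDestroy(stream));
    return kernelNodes;
}
```

On a machine with a CUDA-capable GPU, `countKernelNodesInY()` should return 4.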

1.2 Why use a graph

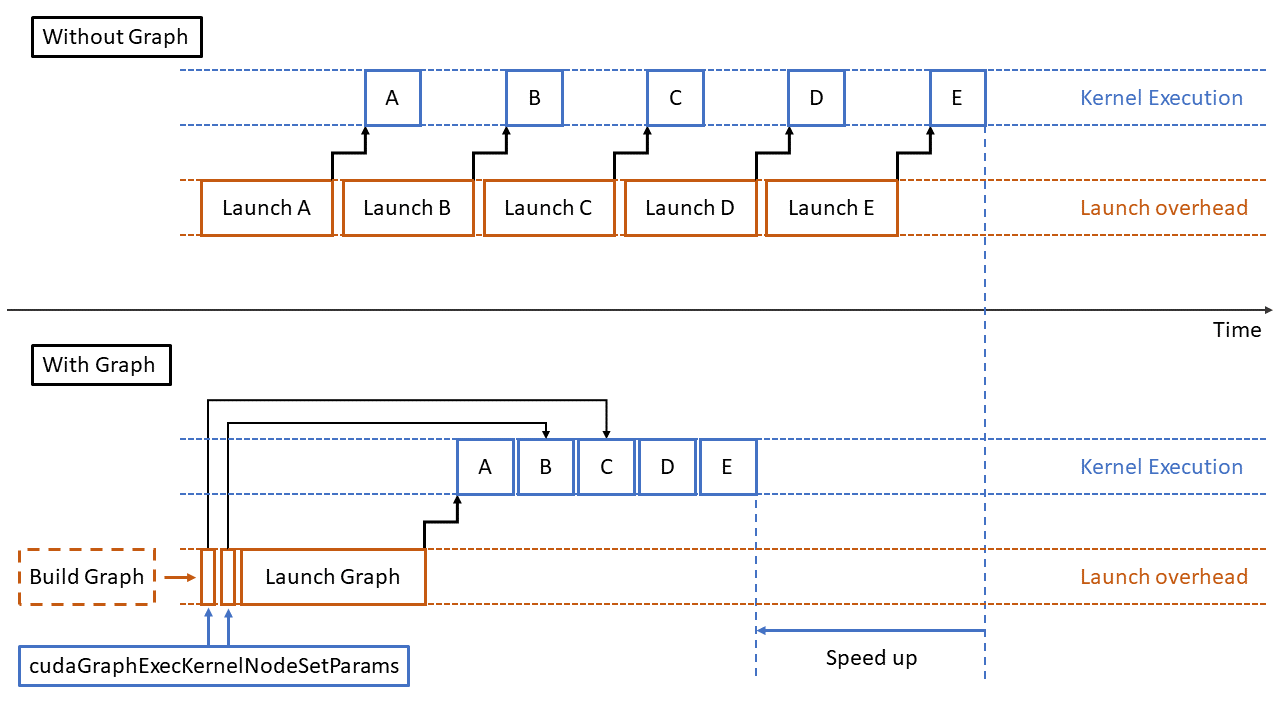

先通俗的理解一下,在我们的实际工程中在一个Stream中会调用多个cuda的kernel,按照Lanuch进入Stream的顺序在一个Stream中执行,每一次在CPU上调用kernel都会有一次Lanuch的操作,如果我们的kernel很多并且Lanuch的时间比较长,实际执行kernel的时间比较短,那么每一次Kernel的Lanuch操作的时间就不可忽略成为影响我们程序性能的重要因素了,描述的可能不直观,来一张官方介绍的图就明白了

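Achieving the speedup shown in the figure above requires some preconditions; in fact, my test below does not show any speedup from using a graph. These preconditions are stated in the following official description: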

Separating out the definition of a graph from its execution enables a number of optimizations: first, CPU launch costs are reduced compared to streams, because much of the setup is done in advance; second, presenting the whole workflow to CUDA enables optimizations which might not be possible with the piecewise work submission mechanism of streams.


My summary of the advantages of using a graph:

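- Launching each kernel individually takes relatively long, while each kernel executes quickly. Once the kernels are built into a graph, their many launches are merged into a single launch, which improves performance.

- With fewer kernel launches, the CPU load also drops.

- Other optimizations internal to CUDA, whose details I do not know.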
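The first two points can be summarized with a rough cost model. This is a simplified sketch under stated assumptions, not a formula from the CUDA documentation: $N$ kernels are issued back to back into one stream, each costs $t_{\text{launch}}$ of CPU time to launch and $t_{\text{kernel}}$ of GPU time to run, launches are asynchronous, $t_{\text{graph}}$ is the CPU cost of one graph launch, and device-side per-node scheduling costs are ignored.

$$
T_{\text{stream}} \approx N \cdot \max\left(t_{\text{launch}},\; t_{\text{kernel}}\right),
\qquad
T_{\text{graph}} \approx t_{\text{graph}} + N \cdot t_{\text{kernel}}
$$

With hypothetical values $N = 40$, $t_{\text{launch}} = 5\,\mu s$ and $t_{\text{kernel}} = 2\,\mu s$, this gives $T_{\text{stream}} \approx 40 \times 5\,\mu s = 200\,\mu s$, whereas $T_{\text{graph}} \approx t_{\text{graph}} + 40 \times 2\,\mu s = t_{\text{graph}} + 80\,\mu s$. When $t_{\text{kernel}} \ge t_{\text{launch}}$, both expressions reduce to roughly $N \cdot t_{\text{kernel}}$, so a graph brings little gain; the benefit appears only when launch time dominates.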

2 Creating and using a graph

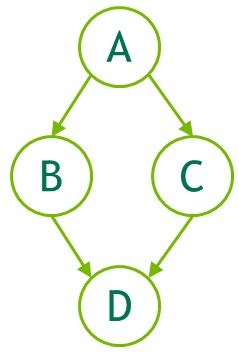

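Graphs can be created through two mechanisms: the explicit API and stream capture. Below are examples of creating and executing the graph shown in the figure.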

2.1 Creating a graph with the explicit API

```cuda
// Create the graph - it starts out empty
cudaGraphCreate(&graph, 0);
```
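A minimal, self-contained sketch of building a diamond-shaped graph with the explicit API follows: A runs first, B and C depend on A, and D depends on B and C, matching the dependencies A→B, A→C, B→D, C→D of the official example. It assumes the CUDA 12.x runtime API (CUDA 11.4 or newer for `cudaGraphInstantiateWithFlags`); the kernels `affine` and `add2`, the helper `makeNodeParams`, and `runExplicitGraph` are hypothetical names chosen for illustration.

```cuda
#include <cuda_runtime.h>
#include <cstdio>
#include <cstdlib>

// Abort with a message if a CUDA runtime call fails.
#define CUDA_CHECK(call)                                              \
    do {                                                              \
        cudaError_t err_ = (call);                                    \
        if (err_ != cudaSuccess) {                                    \
            std::fprintf(stderr, "%s failed: %s\n", #call,            \
                         cudaGetErrorString(err_));                   \
            std::exit(EXIT_FAILURE);                                  \
        }                                                             \
    } while (0)

// out[i] = a * in[i] + b
__global__ void affine(const float* in, float* out, float a, float b, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) out[i] = a * in[i] + b;
}

// out[i] = x[i] + y[i]
__global__ void add2(const float* x, const float* y, float* out, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) out[i] = x[i] + y[i];
}

// Describes one kernel launch. The runtime copies the argument values when
// the node is added, so `args` may point at local variables.
static cudaKernelNodeParams makeNodeParams(void* func, int n, void** args) {
    cudaKernelNodeParams p = {};
    p.func = func;
    p.gridDim = dim3((n + 255) / 256);
    p.blockDim = dim3(256);
    p.sharedMemBytes = 0;
    p.kernelParams = args;  // one pointer per kernel argument
    p.extra = nullptr;
    return p;
}

// Builds A -> {B, C} -> D, launches it `launches` times and returns out[0]:
// x = 1, y = 2 * x = 2, z = x + 3 = 4, out = y + z = 6.
float runExplicitGraph(int n, int launches) {
    float *x, *y, *z, *out;
    CUDA_CHECK(cudaMalloc(&x, n * sizeof(float)));
    CUDA_CHECK(cudaMalloc(&y, n * sizeof(float)));
    CUDA_CHECK(cudaMalloc(&z, n * sizeof(float)));
    CUDA_CHECK(cudaMalloc(&out, n * sizeof(float)));
    CUDA_CHECK(cudaMemset(x, 0, n * sizeof(float)));
    CUDA_CHECK(cudaDeviceSynchronize());

    const float *cx = x, *cy = y, *cz = z;
    float a0 = 0.0f, b0 = 1.0f;  // A: x = 0 * x + 1
    float a1 = 2.0f, b1 = 0.0f;  // B: y = 2 * x + 0
    float a2 = 1.0f, b2 = 3.0f;  // C: z = 1 * x + 3
    void* argsA[] = {&cx, &x, &a0, &b0, &n};
    void* argsB[] = {&cx, &y, &a1, &b1, &n};
    void* argsC[] = {&cx, &z, &a2, &b2, &n};
    void* argsD[] = {&cy, &cz, &out, &n};  // D: out = y + z
    cudaKernelNodeParams pA = makeNodeParams((void*)affine, n, argsA);
    cudaKernelNodeParams pB = makeNodeParams((void*)affine, n, argsB);
    cudaKernelNodeParams pC = makeNodeParams((void*)affine, n, argsC);
    cudaKernelNodeParams pD = makeNodeParams((void*)add2, n, argsD);

    // Create the graph - it starts out empty.
    cudaGraph_t graph;
    CUDA_CHECK(cudaGraphCreate(&graph, 0));

    // Dependencies are passed when each node is added.
    cudaGraphNode_t a, b, c, d;
    CUDA_CHECK(cudaGraphAddKernelNode(&a, graph, nullptr, 0, &pA));
    CUDA_CHECK(cudaGraphAddKernelNode(&b, graph, &a, 1, &pB));  // A -> B
    CUDA_CHECK(cudaGraphAddKernelNode(&c, graph, &a, 1, &pC));  // A -> C
    cudaGraphNode_t bc[] = {b, c};
    CUDA_CHECK(cudaGraphAddKernelNode(&d, graph, bc, 2, &pD));  // B, C -> D

    // Instantiate once, then launch as often as needed.
    cudaGraphExec_t exec;
    CUDA_CHECK(cudaGraphInstantiateWithFlags(&exec, graph, 0));
    cudaStream_t stream;
    CUDA_CHECK(cudaStreamCreate(&stream));
    for (int i = 0; i < launches; ++i) CUDA_CHECK(cudaGraphLaunch(exec, stream));
    CUDA_CHECK(cudaStreamSynchronize(stream));

    float result = 0.0f;
    CUDA_CHECK(cudaMemcpy(&result, out, sizeof(float), cudaMemcpyDeviceToHost));

    CUDA_CHECK(cudaGraphExecDestroy(exec));
    CUDA_CHECK(cudaGraphDestroy(graph));
    CUDA_CHECK(cudaStreamDestroy(stream));
    CUDA_CHECK(cudaFree(x));
    CUDA_CHECK(cudaFree(y));
    CUDA_CHECK(cudaFree(z));
    CUDA_CHECK(cudaFree(out));
    return result;
}
```

Calling `runExplicitGraph(1000, 3)` should return `6.0f`. Here the edges are passed directly to `cudaGraphAddKernelNode`; the official example instead adds all nodes first and then the edges with `cudaGraphAddDependencies`, which describes the same graph.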

2.2 Creating a graph with stream capture

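Stream capture provides a mechanism to create a graph from existing stream-based APIs. A section of code that launches work into streams, including existing code, can be bracketed with calls to cudaStreamBeginCapture() and cudaStreamEndCapture().

For details, see 3.2.8.7.3. Creating a Graph Using Stream Capture in the official CUDA documentation.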

```cuda
cudaGraph_t graph;
```
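A runnable sketch of the stream-capture route for four similar kernels follows, under the same assumptions (CUDA 11.4 or newer; `affine`, `add2` and `runCapturedGraph` are hypothetical names). The launches issued between `cudaStreamBeginCapture` and `cudaStreamEndCapture` are recorded into `graph` instead of being executed; buffers are allocated and initialized before the capture starts.

```cuda
#include <cuda_runtime.h>
#include <cstdio>
#include <cstdlib>

// Abort with a message if a CUDA runtime call fails.
#define CUDA_CHECK(call)                                              \
    do {                                                              \
        cudaError_t err_ = (call);                                    \
        if (err_ != cudaSuccess) {                                    \
            std::fprintf(stderr, "%s failed: %s\n", #call,            \
                         cudaGetErrorString(err_));                   \
            std::exit(EXIT_FAILURE);                                  \
        }                                                             \
    } while (0)

// out[i] = a * in[i] + b
__global__ void affine(const float* in, float* out, float a, float b, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) out[i] = a * in[i] + b;
}

// out[i] = x[i] + y[i]
__global__ void add2(const float* x, const float* y, float* out, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) out[i] = x[i] + y[i];
}

// Records A, B, C, D via stream capture, launches the resulting graph
// `launches` times and returns out[0] (expected 6).
float runCapturedGraph(int n, int launches) {
    float *x, *y, *z, *out;
    CUDA_CHECK(cudaMalloc(&x, n * sizeof(float)));
    CUDA_CHECK(cudaMalloc(&y, n * sizeof(float)));
    CUDA_CHECK(cudaMalloc(&z, n * sizeof(float)));
    CUDA_CHECK(cudaMalloc(&out, n * sizeof(float)));
    CUDA_CHECK(cudaMemset(x, 0, n * sizeof(float)));
    CUDA_CHECK(cudaDeviceSynchronize());  // finish setup before capturing

    // Capture needs a created stream; the legacy NULL stream cannot be captured.
    cudaStream_t stream;
    CUDA_CHECK(cudaStreamCreate(&stream));
    dim3 block(256), grid((n + 255) / 256);

    cudaGraph_t graph;
    CUDA_CHECK(cudaStreamBeginCapture(stream, cudaStreamCaptureModeGlobal));
    affine<<<grid, block, 0, stream>>>(x, x, 0.0f, 1.0f, n);  // A: x = 1
    affine<<<grid, block, 0, stream>>>(x, y, 2.0f, 0.0f, n);  // B: y = 2
    affine<<<grid, block, 0, stream>>>(x, z, 1.0f, 3.0f, n);  // C: z = 4
    add2<<<grid, block, 0, stream>>>(y, z, out, n);           // D: out = 6
    CUDA_CHECK(cudaStreamEndCapture(stream, &graph));

    cudaGraphExec_t exec;
    CUDA_CHECK(cudaGraphInstantiateWithFlags(&exec, graph, 0));
    for (int i = 0; i < launches; ++i) CUDA_CHECK(cudaGraphLaunch(exec, stream));
    CUDA_CHECK(cudaStreamSynchronize(stream));

    float result = 0.0f;
    CUDA_CHECK(cudaMemcpy(&result, out, sizeof(float), cudaMemcpyDeviceToHost));

    CUDA_CHECK(cudaGraphExecDestroy(exec));
    CUDA_CHECK(cudaGraphDestroy(graph));
    CUDA_CHECK(cudaStreamDestroy(stream));
    CUDA_CHECK(cudaFree(x));
    CUDA_CHECK(cudaFree(y));
    CUDA_CHECK(cudaFree(z));
    CUDA_CHECK(cudaFree(out));
    return result;
}
```

Because all four launches go into a single stream, the captured graph is a linear chain A → B → C → D rather than a diamond; out[0] is still 6. To capture parallel branches, work can be forked into a second stream during capture with `cudaEventRecord` and `cudaStreamWaitEvent`.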

2.3 Test example

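My test kernels are all simple, so their launches are short and the advantage of a graph does not show up. The test can still serve as a usage example for reference.

The test code is as follows: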

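The following is a minimal sketch of a comparable benchmark, not the author's test code: a hypothetical `increment` kernel is launched 40 times per run for 5 runs, first directly into a stream and then as one captured 40-kernel graph, and the GPU time of each phase is measured with CUDA events. It assumes CUDA 11.4 or newer; `benchmark`, `BenchResult` and `increment` are illustrative names.

```cuda
#include <cuda_runtime.h>
#include <cstdio>
#include <cstdlib>

// Abort with a message if a CUDA runtime call fails.
#define CUDA_CHECK(call)                                              \
    do {                                                              \
        cudaError_t err_ = (call);                                    \
        if (err_ != cudaSuccess) {                                    \
            std::fprintf(stderr, "%s failed: %s\n", #call,            \
                         cudaGetErrorString(err_));                   \
            std::exit(EXIT_FAILURE);                                  \
        }                                                             \
    } while (0)

// Hypothetical tiny kernel: p[i] += 1.
__global__ void increment(float* p, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) p[i] += 1.0f;
}

struct BenchResult {
    float msStream;  // GPU time: 5 runs x 40 individual launches
    float msGraph;   // GPU time: 5 launches of one 40-kernel graph
    float value;     // p[0] after both phases
};

constexpr int kKernels = 40;  // kernels per run
constexpr int kRuns = 5;      // number of runs

BenchResult benchmark(int n) {
    float* p;
    CUDA_CHECK(cudaMalloc(&p, n * sizeof(float)));
    cudaStream_t stream;
    CUDA_CHECK(cudaStreamCreate(&stream));
    cudaEvent_t start, stop;
    CUDA_CHECK(cudaEventCreate(&start));
    CUDA_CHECK(cudaEventCreate(&stop));
    dim3 block(256), grid((n + 255) / 256);
    BenchResult r{};

    // Warm-up launch so one-time setup is not timed, then reset the data.
    increment<<<grid, block, 0, stream>>>(p, n);
    CUDA_CHECK(cudaStreamSynchronize(stream));
    CUDA_CHECK(cudaMemset(p, 0, n * sizeof(float)));
    CUDA_CHECK(cudaDeviceSynchronize());

    // Without a graph: one launch per kernel.
    CUDA_CHECK(cudaEventRecord(start, stream));
    for (int run = 0; run < kRuns; ++run)
        for (int k = 0; k < kKernels; ++k)
            increment<<<grid, block, 0, stream>>>(p, n);
    CUDA_CHECK(cudaEventRecord(stop, stream));
    CUDA_CHECK(cudaEventSynchronize(stop));
    CUDA_CHECK(cudaEventElapsedTime(&r.msStream, start, stop));

    // With a graph: capture the 40 launches once...
    cudaGraph_t graph;
    CUDA_CHECK(cudaStreamBeginCapture(stream, cudaStreamCaptureModeGlobal));
    for (int k = 0; k < kKernels; ++k)
        increment<<<grid, block, 0, stream>>>(p, n);
    CUDA_CHECK(cudaStreamEndCapture(stream, &graph));
    cudaGraphExec_t exec;
    CUDA_CHECK(cudaGraphInstantiateWithFlags(&exec, graph, 0));
    CUDA_CHECK(cudaGraphUpload(exec, stream));  // keep upload out of the timing

    // ...then launch the whole graph once per run.
    CUDA_CHECK(cudaEventRecord(start, stream));
    for (int run = 0; run < kRuns; ++run)
        CUDA_CHECK(cudaGraphLaunch(exec, stream));
    CUDA_CHECK(cudaEventRecord(stop, stream));
    CUDA_CHECK(cudaEventSynchronize(stop));
    CUDA_CHECK(cudaEventElapsedTime(&r.msGraph, start, stop));

    CUDA_CHECK(cudaMemcpy(&r.value, p, sizeof(float), cudaMemcpyDeviceToHost));
    CUDA_CHECK(cudaGraphExecDestroy(exec));
    CUDA_CHECK(cudaGraphDestroy(graph));
    CUDA_CHECK(cudaEventDestroy(start));
    CUDA_CHECK(cudaEventDestroy(stop));
    CUDA_CHECK(cudaStreamDestroy(stream));
    CUDA_CHECK(cudaFree(p));
    return r;
}
```

p[0] ends at 400 (200 increments per phase). In an Nsight Systems trace, the first phase appears as 200 individual kernel launches and the second as 5 `cudaGraphLaunch` calls; the two timings depend on the GPU, driver and kernel size, so no particular ratio is implied.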

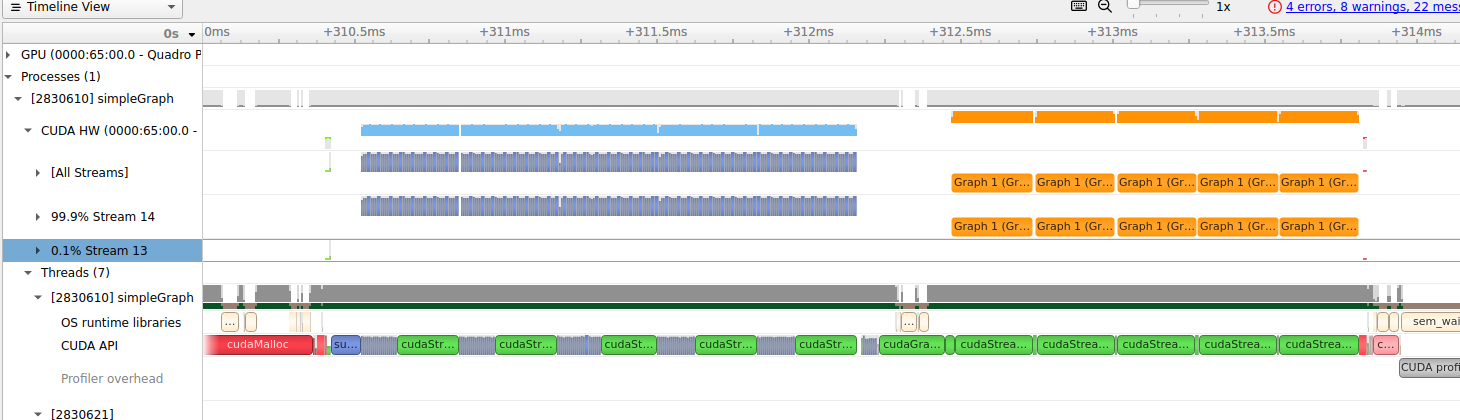

Test results (40 kernels per run, 5 runs): the blue section at the beginning is without a graph, and the orange section afterwards is with a graph (one graph containing 40 kernels).

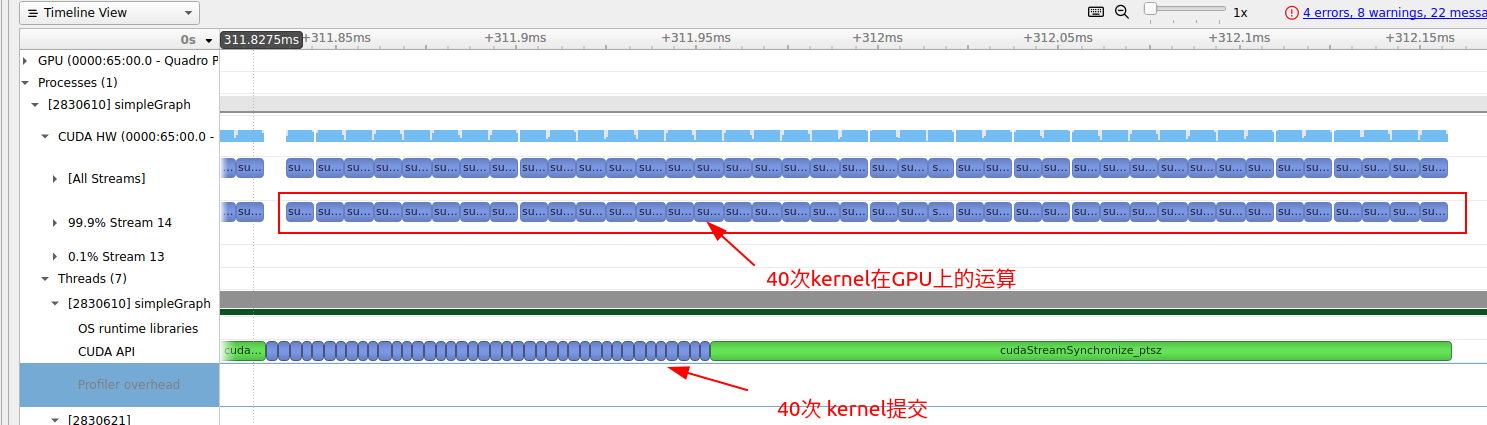

First, the case without a graph. The figure shows a single run (one of the five runs): there are 40 kernel launches.

Next, the case with a graph. The figure shows a single run (one of the five runs): there is only one graph launch per run (five in total).

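Using a graph did not reduce the total time here, mainly because the kernels I tested are small and their launches are fast. In real projects, kernel launch overhead can be larger, mainly in the following situations: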

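- Large kernels: the larger the kernel, the higher the launch overhead.

- Many kernel parameters: the more parameters a kernel takes, the higher the launch overhead.

- Frequent kernel calls: the more often kernels are launched, the larger the total launch overhead.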
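To check the second and third points on your own hardware, the host-side launch cost can be measured directly. This sketch (hypothetical names `BigArgs`, `bigArgKernel`, `measureLaunchCost`) times how long the CPU takes to enqueue launches of an empty kernel and of a kernel that takes 1 KiB of parameters by value; multiplying the per-launch figure by the number of launches gives the total launch overhead that a graph could reduce.

```cuda
#include <cuda_runtime.h>
#include <chrono>
#include <cstdio>
#include <cstdlib>

// Abort with a message if a CUDA runtime call fails.
#define CUDA_CHECK(call)                                              \
    do {                                                              \
        cudaError_t err_ = (call);                                    \
        if (err_ != cudaSuccess) {                                    \
            std::fprintf(stderr, "%s failed: %s\n", #call,            \
                         cudaGetErrorString(err_));                   \
            std::exit(EXIT_FAILURE);                                  \
        }                                                             \
    } while (0)

// Hypothetical 1 KiB argument, passed to the kernel by value.
struct BigArgs {
    float values[256];
};

__global__ void emptyKernel() {}
__global__ void bigArgKernel(BigArgs args) { (void)args; }

struct LaunchCost {
    double usPerEmptyLaunch;   // host time per launch, no parameters
    double usPerBigArgLaunch;  // host time per launch, 1 KiB of parameters
};

// Host-side time to enqueue `count` launches, in microseconds per launch.
template <typename Launch>
static double hostMicrosPerLaunch(int count, Launch launch) {
    auto t0 = std::chrono::steady_clock::now();
    for (int i = 0; i < count; ++i) launch();
    auto t1 = std::chrono::steady_clock::now();
    CUDA_CHECK(cudaDeviceSynchronize());  // drain the queue outside the timed region
    CUDA_CHECK(cudaGetLastError());
    return std::chrono::duration<double, std::micro>(t1 - t0).count() / count;
}

LaunchCost measureLaunchCost(int count) {
    BigArgs args{};
    // Warm up so context creation and module loading are not timed.
    emptyKernel<<<1, 1>>>();
    bigArgKernel<<<1, 1>>>(args);
    CUDA_CHECK(cudaDeviceSynchronize());

    LaunchCost c;
    c.usPerEmptyLaunch = hostMicrosPerLaunch(count, [] { emptyKernel<<<1, 1>>>(); });
    c.usPerBigArgLaunch =
        hostMicrosPerLaunch(count, [&] { bigArgKernel<<<1, 1>>>(args); });
    return c;
}
```

Results vary with GPU, driver and CUDA version, so no specific numbers are claimed here. If `count` is large, the launch queue can fill up and further launches block, in which case the timing also includes waiting for the GPU.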