5-2 共享内存的数据布局

5.2 共享内存的数据布局

这一节主要是介绍了共享内存的矩阵数组访问模式,按行和按列访问还有内存的填充,说白了就是如何索引到数据。

- 行主序与列主序访问

- 静态与动态共享内存的声明

- 文件范围与内核范围的共享内存

- 内存填充与无内存填充

当使用共享内存设计核函数时,重点应放在以下两个概念上:

- 跨内存存储体映射数据元素

- 从线程索引到共享内存偏移的映射

目的是当这些概念了然于心时,就可以设计一个高效的核函数了,它可以避免存储体冲突,并充分利用共享内存的优势。

5.2.1方形共享内存

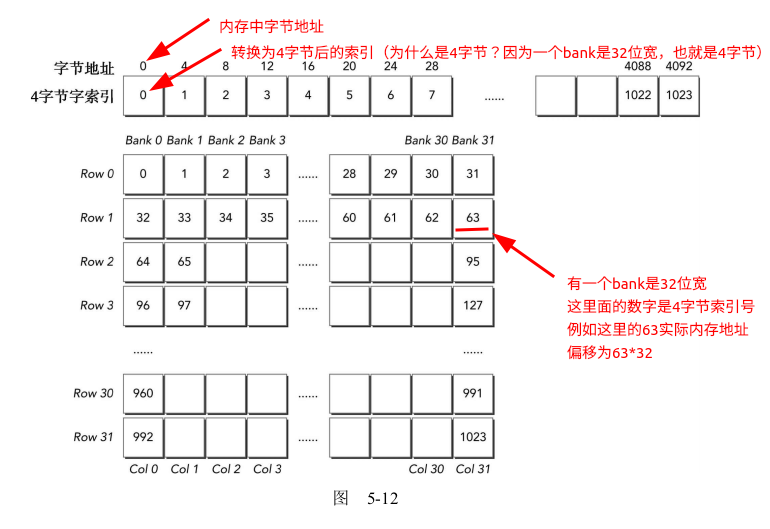

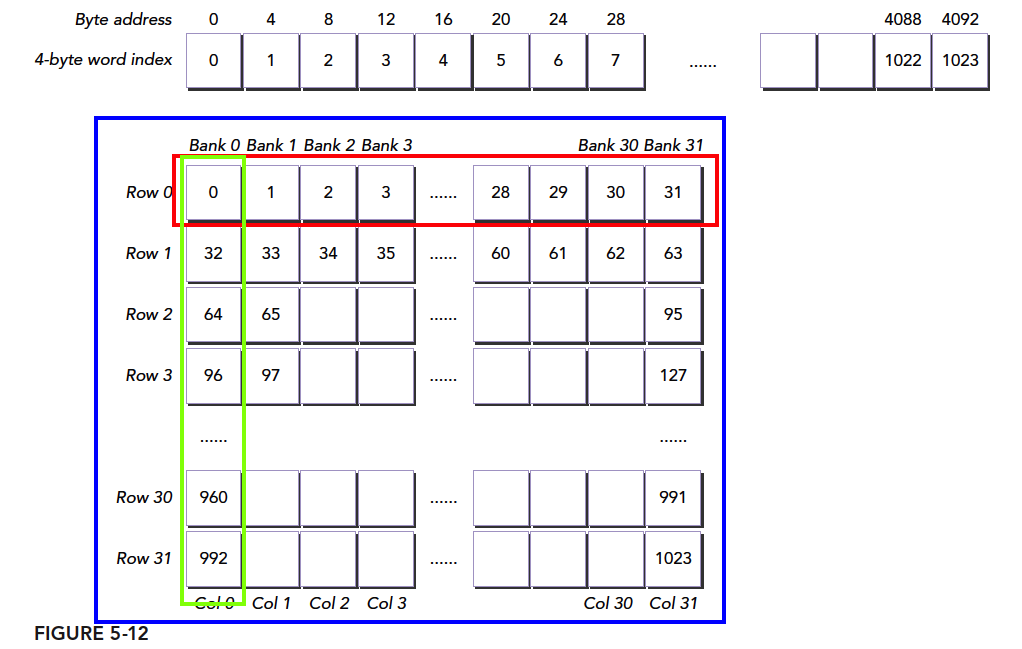

图5-12显示了一个共享内存块,它在每个维度有32个元素,且按行主序进行存储。上部的图显示了一维数据布局的实际排列,下部的图显示了带有4字节数据元素和存储体映射的二维共享内存逻辑视图。使用下面的语句静态声明一个二维共享内存变量:

1 |

|

如果访问上面申请的tile共享内存,可以有两种方式索引

1 | tile[threadIdx.y][threadIdx.x]; |

上一节提到在同一个线程束中若有访问独立存储体的线程,则它是最优的

我们的共享内存变量类型是int(4字节)。一个元素占一个block的宽度。因此使用

1 | tile[threadIdx.y][threadIdx.x]; |

上面的索引方式就可以取出共享内存中的行(例如第一行就是红色框部分),无冲突,一个事务完成。

使用

1 | tile[threadIdx.x][threadIdx.y]; |

就可以索引到列。上图的绿色部分,冲突达到了最大,效率最低。

5.2.1.1 行主序访问和列主序访问

考虑一个核函数有两个简单操作:

- 将全局线程索引按行主序写入到一个二维共享内存数组中

- 从共享内存中按行主序读取这些值并将它们存储到全局内存中

核函数如下

1 |

|

- 其中

tile[threadIdx.y][threadIdx.x] = idx;将索引按照行主序顺序写入共享内存块。共享内存的存储操作 __syncthreads();- 同步点(使用syncthreads函数),所有线程必须将存储的数据送到共享内存块中,才进行下一步的操作

out[idx] = tile[threadIdx.y][threadIdx.x]按行主序从共享内存给全局内存赋值。包含两个内存操作:共享内存的加载操作和全局内存的存储操作- 因为相同线程束中的线程有连续的threadIdx.x值,用threadIdx.x索引共享内存数组tile的最内层维度,所以核函数无存储体冲突。

修改上面的核函数,交换两个[threadIdx.y][threadIdx.x]为[threadIdx.x][threadIdx.y]。那么线程束就会按照列主序访问。修改如下

1 | __global__ void setColReadCol (int *out) |

- 共享内存的写入和读取都是按列主序。

两个核函数的运行测试如下

1 | huolin@huolin:~/$ sudo nvprof ./checkSmemSquare |

我们上面两个典型的例子结果如下

1 | 13.35% 4.2560us 1 4.2560us 4.2560us 4.2560us setColReadCol(int*) |

使用下面的命令查看存储体的加载和存储冲突

shared_load_transactions_per_requestshared_store_transactions_per_request

1 | sudo nvprof --metrics shared_load_transactions_per_request,shared_store_transactions_per_request ./checkSmemSquare |

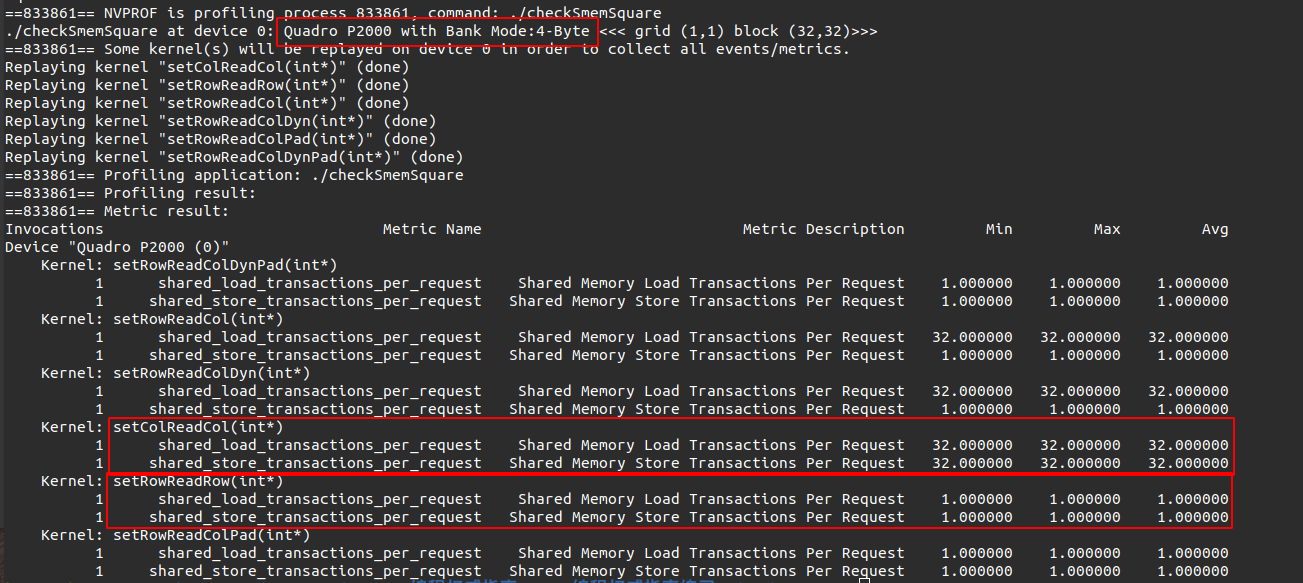

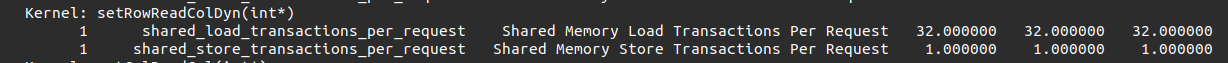

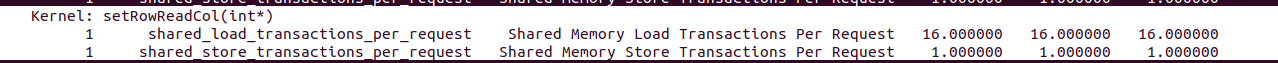

结果如下:

行主序setRowReadRow的加载和存储事务都是1,而列主序setColReadCol的加载和存储事务都是32。注意,我们这个设备是4-byte宽的,上图中Quadro P2000 with Bank Mode:4-Byte显示的有。

完整的程序如下:

1 |

|

5.2.1.2 按行主序写和按列主序读

上一节的两个例子

setRowReadRow对共享内存的操作是按行写,行读,如下

1 | // shared memory store operation |

setColReadCol对共享内存的操作是按列写,列读,如下

1 | // shared memory store operation |

那么还有情况就是行写,列读,setRowReadCol函数如下:

1 | __global__ void setRowReadCol(int *out) |

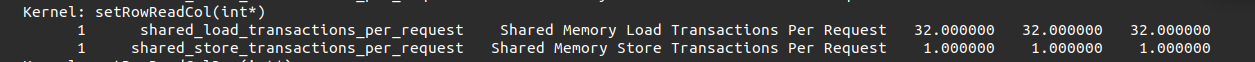

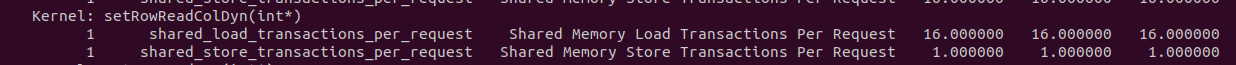

测试结果再上面小节已经有了。容易分析出 共享内存 的存储事务是1,加载事务是32

5.2.1.3 动态共享内存

也可使用动态声明共享内存,从而实现相同的核函数。可以在核函数外声明动态共享内存,使它的作用域为整个文件,也可以在核函数内声明动态共享内存,将其作用域限制在该内核之中。动态共享内存必须被声明为一个未定大小的一维数组。

如上一节描述

在某个核函数的内部或所有核函数的外部进行

1 | extern __shared__ int tile[]; |

因为这个数组的大小在编译时是未知的,所以在每个核函数被调用时,需要动态分配共享内存,将所需的大小按字节数作为三重括号内的第三个参数

1 | kernel<<<grid,block,isize*sizeof(int)>>>(...) |

修改一下内存的索引就可以得到上面相同setRowReadCol功能相同的函数

1 | __global__ void setRowReadColDyn(int *out) |

测试结果和上一节一致

5.2.1.4 填充静态声明的共享内存

这一节使用填充的方法来避免存储体冲突。

填充静态声明的共享内存很简单。只需简单地将一列添加到二维共享内存分配中,代码如下所示:其他都不变

1 | __shared__ int tile[BDIMY][BDIMX + IPAD]; |

核函数如下:

1 | __global__ void setRowReadColPad(int *out) |

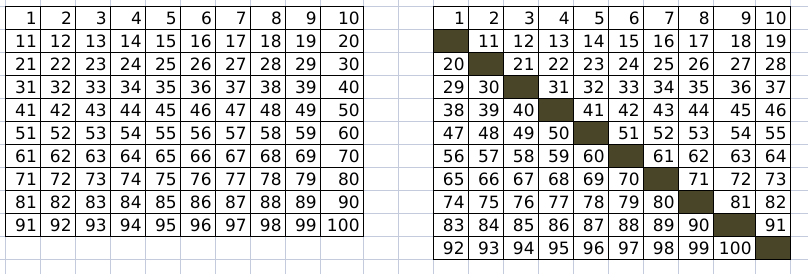

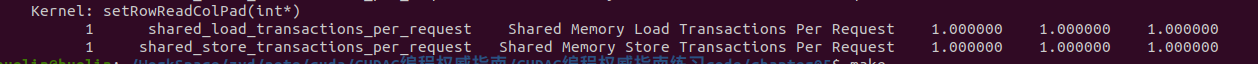

测试结果如下:没有冲突了。

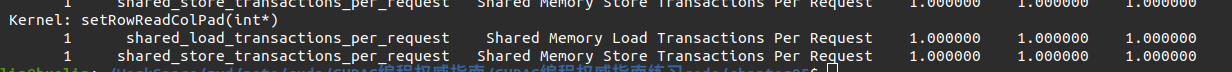

分析:上一节提到,增加一列共享内存就可以做到填充,但是增加的一列不存储任何数据。就上上面我们申请的共享内存是tile[32][33];但是block的数量依然是32。将二维的内存转换为一维的线性内存地址空间更加好理解。因为block的数量还是32(我们的tile数据类型是4字节的int,一个元素占一个block),因此除了第一行,剩下的行元素的索引位置会有1的偏移量。

如下图所示,假如block是10,我们的共享内存是10 * 10大小。block内存储的就是编号数据。

左侧:没有填充 共享内存大小10 * 10。

右侧:扩展一列共享内存大小10 * 11 。数据在block内的存储情况。一定要注意block依然是10 。黑色的块是扩展的,不存储数据,只是为了改变在block内的索引。按一维展开后更好理解。

5.2.1.5 填充动态声明的共享内存

动态的填充就是考虑计算索引麻烦点,如下:

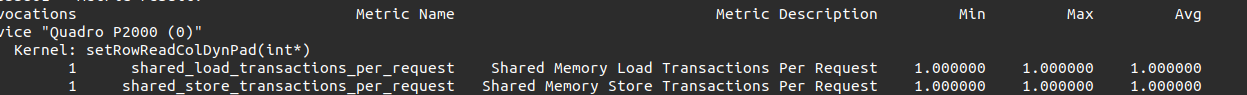

1 | __global__ void setRowReadColDynPad(int *out) |

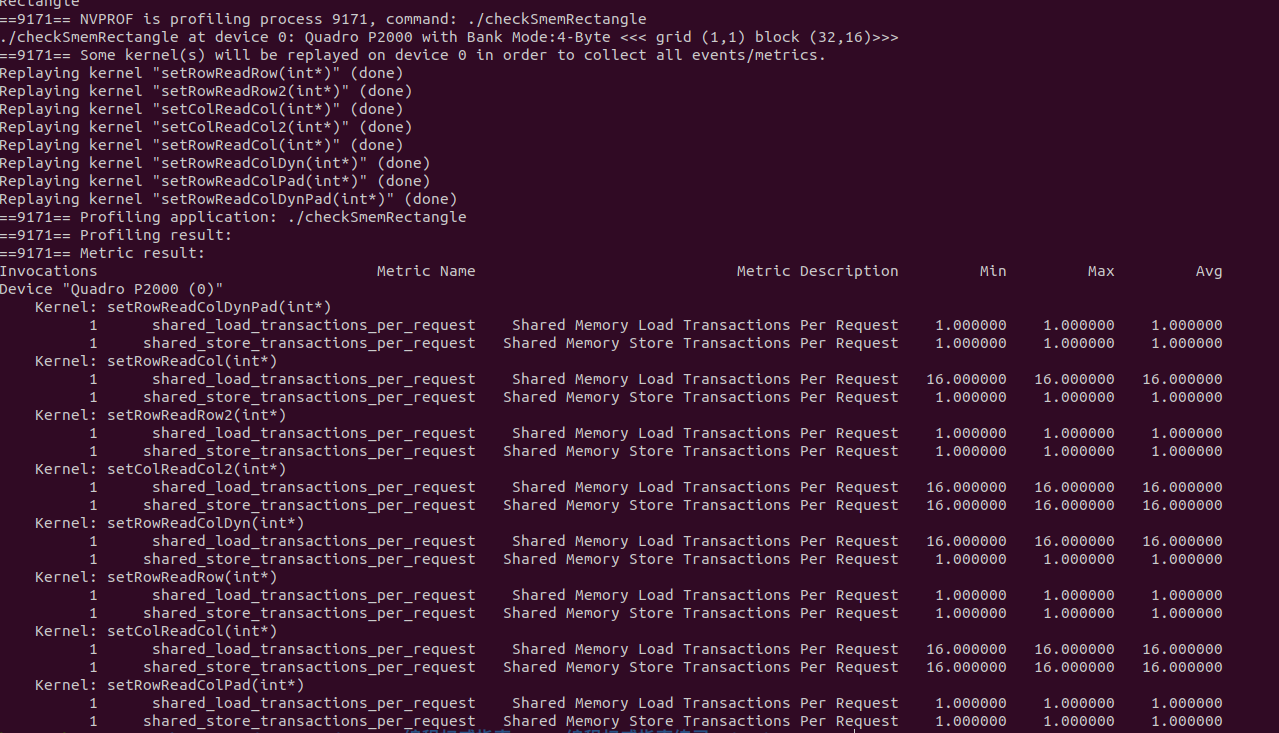

5.2.1.6 方形共享内存内核性能的比较

到目前为止,从所有执行过的内核运行时间可以看出:

- 使用填充的内核可提高性能,因为它减少了存储体冲突

- 带有动态声明共享内存的内核增加了少量的消耗

如果读和写操作使用不同的顺序(例如,读操作使用行主序,而写操作使用列主序),那么核函数会产生转置矩阵。这些简单的核函数为更复杂的转置算法奠定了基础。

5.2.2 矩形共享内存

如果申请的共享内存不是正方形的,需要计算一下索引。

1 |

|

5.2.2.1 行主序访问与列主序访问

注意下面两个函数申请共享内存的维度不同

tile[threadIdx.y][threadIdx.x]外层的维度是threadIdx.x也就是按行

tile[threadIdx.x][threadIdx.y]外层的维度是threadIdx.y也就是按列

1 | __global__ void setRowReadRow(int *out) |

5.2.2.2 行主序写操作和列主序读操作

为了实现矩形矩阵的转置,可以列读行写,如下

同样的如果是setColReadCol函数如下

1 | __global__ void setRowReadCol(int *out) |

- 需要转置,因此申请的共享内存为

tile[BDIMY][BDIMX] unsigned int idx = threadIdx.y * blockDim.x + threadIdx.x;将当前线程的二维线程索

引转换为一维全局线程IDirow = idx / blockDim.y和icol = idx % blockDim.y计算出行和列的索引tile[threadIdx.y][threadIdx.x] = idx共享内存按行存储(行写)。因为外层维度是threadIdx.x。写操作期间没有存储体冲突。out[idx] = tile[icol][irow]共享内存按列读取,因为icol是按照threadIdx.x线性变化的。读操作有存储体冲突16

结果如下

5.2.2.3 动态声明的共享内存

上面的例子使用动态共享内存代码如下

1 | CHECK(cudaMemset(d_C, 0, nBytes)); |

结果和静态的没有区别

5.2.2.4 填充静态声明的共享内存

和方形共享内存操作一致,下面使用填充来减少存储体冲突

1 | __global__ void setRowReadColPad(int *out) |

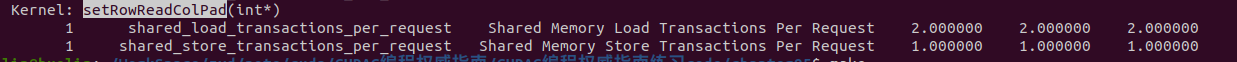

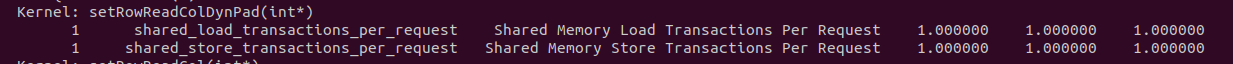

当IPAD=2时结果为

当IPAD=1时结果为

所以具体的IPAD需要根据自己的核函数block大小调整。

5.2.2.5 填充动态声明的共享内存

下面是动态的共享内存填充实现,需要注意的就是索引了。

1 | __global__ void setRowReadColDynPad(int *out) |

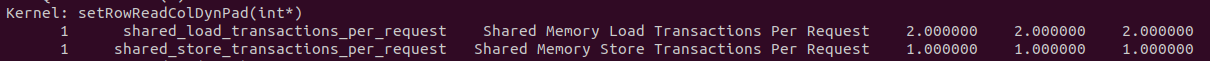

当IPAD=2时结果为

当IPAD=1时结果为

所以具体的IPAD需要根据自己的核函数block大小调整。

5.2.2.6 矩形共享内存内核性能的比较

整体的对比如下

源码如下:

1 |

|