CPU and GPU Memory

Introduction

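This section covers how the CPU memory that we exchange with the GPU is allocated and used, and how the different kinds of memory differ. The test platform is Orin.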

Reference: https://docs.nvidia.com/cuda/cuda-for-tegra-appnote/index.html#memory-management

Basics

The focus here is CPU memory, which falls into two main kinds:

- pageable mem, e.g. CPU memory allocated with malloc

- pinned mem, e.g. CPU memory allocated with cudaMallocHost or cudaHostAlloc

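Whenever H2D or D2H copies are involved, the CPU-side memory should be pinned mem to get the fastest copies. With pageable mem, the driver has to stage the data through an internal pinned buffer first, which adds an extra host-side copy.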
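A minimal sketch for checking this on your own device: it times one H2D cudaMemcpy from a malloc buffer and from a cudaMallocHost buffer using CUDA events. The helper timeH2D and the CHECK macro are hypothetical names introduced for illustration, and the measured times depend on the device and the buffer size.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <cstring>
#include <cuda_runtime.h>

// Abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t err_ = (call);                                      \
        if (err_ != cudaSuccess) {                                      \
            std::fprintf(stderr, "CUDA error %s at %s:%d\n",            \
                         cudaGetErrorString(err_), __FILE__, __LINE__); \
            std::exit(EXIT_FAILURE);                                    \
        }                                                               \
    } while (0)

// Time one H2D cudaMemcpy of `bytes` bytes from a pageable (malloc) or
// pinned (cudaMallocHost) host buffer. Returns the elapsed time in ms.
float timeH2D(bool pinned, size_t bytes) {
    void* host = nullptr;
    if (pinned) {
        CHECK(cudaMallocHost(&host, bytes));
    } else {
        host = std::malloc(bytes);
        if (host == nullptr) std::exit(EXIT_FAILURE);
    }
    std::memset(host, 1, bytes);  // touch every page so the buffer is resident

    void* dev = nullptr;
    CHECK(cudaMalloc(&dev, bytes));

    cudaEvent_t start, stop;
    CHECK(cudaEventCreate(&start));
    CHECK(cudaEventCreate(&stop));
    CHECK(cudaEventRecord(start));
    CHECK(cudaMemcpy(dev, host, bytes, cudaMemcpyHostToDevice));
    CHECK(cudaEventRecord(stop));
    CHECK(cudaEventSynchronize(stop));
    float ms = 0.0f;
    CHECK(cudaEventElapsedTime(&ms, start, stop));

    CHECK(cudaEventDestroy(start));
    CHECK(cudaEventDestroy(stop));
    CHECK(cudaFree(dev));
    if (pinned) {
        CHECK(cudaFreeHost(host));
    } else {
        std::free(host);
    }
    return ms;
}
```

For example, comparing `timeH2D(false, 64 << 20)` with `timeH2D(true, 64 << 20)` shows the cost of a 64 MiB copy from each kind of memory.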

Matrix addition is used below to demonstrate each kind of memory.

pageable mem

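A minimal sketch of the pageable case, assuming an element-wise addition of two rows x cols float matrices stored row-major. The kernel matAdd, the helper runPageable and the CHECK macro are hypothetical names, not the author's original test code.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t err_ = (call);                                      \
        if (err_ != cudaSuccess) {                                      \
            std::fprintf(stderr, "CUDA error %s at %s:%d\n",            \
                         cudaGetErrorString(err_), __FILE__, __LINE__); \
            std::exit(EXIT_FAILURE);                                    \
        }                                                               \
    } while (0)

// Element-wise matrix addition c = a + b over n = rows * cols elements.
__global__ void matAdd(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

// Pageable path: malloc host buffers, cudaMalloc device buffers,
// H2D copies before the kernel and a D2H copy after it.
bool runPageable(int rows, int cols) {
    const int n = rows * cols;
    const size_t bytes = size_t(n) * sizeof(float);

    float* hA = static_cast<float*>(std::malloc(bytes));
    float* hB = static_cast<float*>(std::malloc(bytes));
    float* hC = static_cast<float*>(std::malloc(bytes));
    if (!hA || !hB || !hC) { std::free(hA); std::free(hB); std::free(hC); return false; }
    for (int i = 0; i < n; ++i) { hA[i] = 1.0f; hB[i] = 2.0f; }

    float *dA, *dB, *dC;
    CHECK(cudaMalloc(&dA, bytes));
    CHECK(cudaMalloc(&dB, bytes));
    CHECK(cudaMalloc(&dC, bytes));

    CHECK(cudaMemcpy(dA, hA, bytes, cudaMemcpyHostToDevice));  // pageable -> device
    CHECK(cudaMemcpy(dB, hB, bytes, cudaMemcpyHostToDevice));  // pageable -> device
    const int threads = 256;
    matAdd<<<(n + threads - 1) / threads, threads>>>(dA, dB, dC, n);
    CHECK(cudaGetLastError());
    CHECK(cudaMemcpy(hC, dC, bytes, cudaMemcpyDeviceToHost));  // device -> pageable, waits for the kernel

    bool ok = true;
    for (int i = 0; i < n; ++i) ok = ok && (hC[i] == 3.0f);

    CHECK(cudaFree(dA)); CHECK(cudaFree(dB)); CHECK(cudaFree(dC));
    std::free(hA); std::free(hB); std::free(hC);
    return ok;
}
```

Usage: `assert(runPageable(1024, 1024));` adds two 1024 x 1024 matrices and checks every element.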

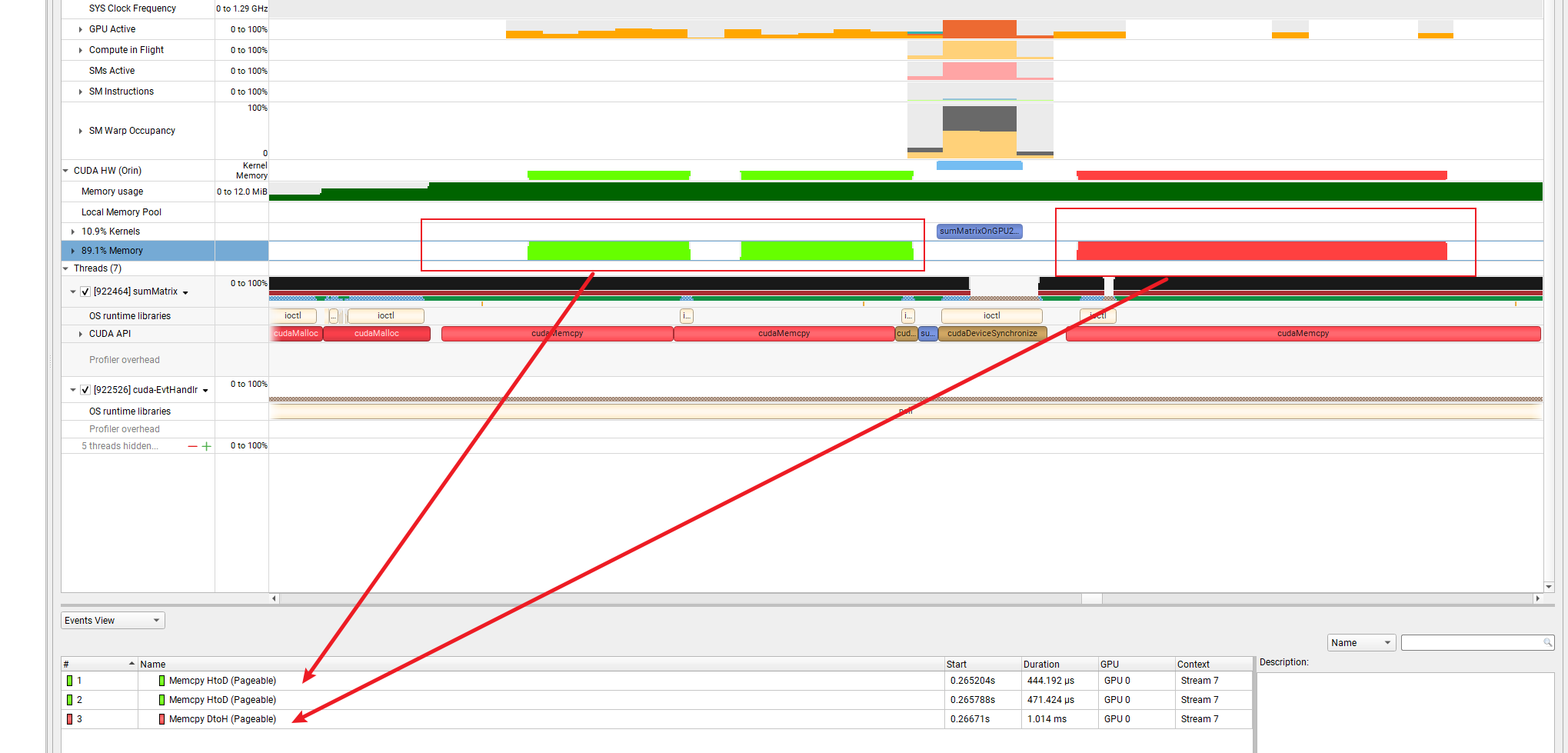

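The nsys profile shows copies both before and after the kernel, and the host side is pageable mem. This is the worst case: a single memory copy (one green segment) takes 442 us.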

pinned mem

CPU memory allocated with cudaHostAlloc or cudaMallocHost.

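A minimal sketch of the pinned case, assuming the same element-wise addition of row-major float matrices. Only the host allocation differs from a malloc-based version; the copies become pinned->Device and Device->pinned. runPinned, matAdd and CHECK are hypothetical names.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t err_ = (call);                                      \
        if (err_ != cudaSuccess) {                                      \
            std::fprintf(stderr, "CUDA error %s at %s:%d\n",            \
                         cudaGetErrorString(err_), __FILE__, __LINE__); \
            std::exit(EXIT_FAILURE);                                    \
        }                                                               \
    } while (0)

// Element-wise matrix addition c = a + b over n = rows * cols elements.
__global__ void matAdd(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

// Pinned path: cudaHostAlloc host buffers (cudaMallocHost(&hA, bytes)
// would be equivalent), cudaMalloc device buffers, explicit copies.
bool runPinned(int rows, int cols) {
    const int n = rows * cols;
    const size_t bytes = size_t(n) * sizeof(float);

    float *hA, *hB, *hC;
    CHECK(cudaHostAlloc(&hA, bytes, cudaHostAllocDefault));
    CHECK(cudaHostAlloc(&hB, bytes, cudaHostAllocDefault));
    CHECK(cudaHostAlloc(&hC, bytes, cudaHostAllocDefault));
    for (int i = 0; i < n; ++i) { hA[i] = 1.0f; hB[i] = 2.0f; }

    float *dA, *dB, *dC;
    CHECK(cudaMalloc(&dA, bytes));
    CHECK(cudaMalloc(&dB, bytes));
    CHECK(cudaMalloc(&dC, bytes));

    CHECK(cudaMemcpy(dA, hA, bytes, cudaMemcpyHostToDevice));  // pinned -> device
    CHECK(cudaMemcpy(dB, hB, bytes, cudaMemcpyHostToDevice));  // pinned -> device
    const int threads = 256;
    matAdd<<<(n + threads - 1) / threads, threads>>>(dA, dB, dC, n);
    CHECK(cudaGetLastError());
    CHECK(cudaMemcpy(hC, dC, bytes, cudaMemcpyDeviceToHost));  // device -> pinned

    bool ok = true;
    for (int i = 0; i < n; ++i) ok = ok && (hC[i] == 3.0f);

    CHECK(cudaFree(dA)); CHECK(cudaFree(dB)); CHECK(cudaFree(dC));
    CHECK(cudaFreeHost(hA)); CHECK(cudaFreeHost(hB)); CHECK(cudaFreeHost(hC));
    return ok;
}
```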

In the corresponding nsys profile, a single memory copy takes 127 us.

pinned mem without copying

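Because I am using an Orin device, the CPU and GPU actually share the same physical memory, so nothing needs to be copied to the GPU: the pointer to the allocated CPU memory can be passed directly to a GPU kernel.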

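There is one case where this usage is not appropriate:

- When a small piece of data is accessed and computed on repeatedly by a kernel and only then passed out of the kernel, GPU memory from cudaMalloc is the best choice. Do not allocate this buffer with cudaHostAlloc or cudaMallocHost: accesses to cudaMalloc memory can be served from the GPU L2 cache, whereas memory from cudaHostAlloc or cudaMallocHost can only be accessed from global mem, which is too slow.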

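Note: this also requires a sufficiently recent CUDA version. Check NVIDIA's unified memory documentation for the exact version that introduced support; my CUDA version already met the requirement, so I did not look into it further.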

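A minimal sketch of pinned memory without any copy, assuming a 64-bit platform with unified virtual addressing (UVA), such as Orin, where pointers returned by cudaHostAlloc can be used directly inside kernels. runPinnedNoCopy, matAdd and CHECK are hypothetical names. The cudaDeviceSynchronize before reading the result takes over the synchronization that the D2H cudaMemcpy used to provide.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t err_ = (call);                                      \
        if (err_ != cudaSuccess) {                                      \
            std::fprintf(stderr, "CUDA error %s at %s:%d\n",            \
                         cudaGetErrorString(err_), __FILE__, __LINE__); \
            std::exit(EXIT_FAILURE);                                    \
        }                                                               \
    } while (0)

// Element-wise matrix addition c = a + b over n = rows * cols elements.
__global__ void matAdd(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

// Pinned, no copy: the kernel receives the cudaHostAlloc pointers directly.
bool runPinnedNoCopy(int rows, int cols) {
    int uva = 0;
    CHECK(cudaDeviceGetAttribute(&uva, cudaDevAttrUnifiedAddressing, 0));
    if (!uva) return false;  // without UVA this sketch does not apply

    const int n = rows * cols;
    const size_t bytes = size_t(n) * sizeof(float);
    float *a, *b, *c;
    CHECK(cudaHostAlloc(&a, bytes, cudaHostAllocDefault));
    CHECK(cudaHostAlloc(&b, bytes, cudaHostAllocDefault));
    CHECK(cudaHostAlloc(&c, bytes, cudaHostAllocDefault));
    for (int i = 0; i < n; ++i) { a[i] = 1.0f; b[i] = 2.0f; }

    const int threads = 256;
    matAdd<<<(n + threads - 1) / threads, threads>>>(a, b, c, n);  // host pointers, no cudaMemcpy
    CHECK(cudaGetLastError());
    CHECK(cudaDeviceSynchronize());  // the CPU must not read c before the kernel finishes

    bool ok = true;
    for (int i = 0; i < n; ++i) ok = ok && (c[i] == 3.0f);
    CHECK(cudaFreeHost(a)); CHECK(cudaFreeHost(b)); CHECK(cudaFreeHost(c));
    return ok;
}
```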

cudaHostRegister

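Now let's test another case. Suppose the CPU memory the kernel computes on was not allocated by us, for example it was passed in from another process, and it is pageable mem. We can use cudaHostRegister to turn this memory into pinned mem, and the conversion only needs to be done once.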

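In the test, the kernel's input memory is processed with cudaHostRegister, but the output memory for the D2H copy is not, so the two kinds of copy can be compared.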

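A minimal sketch of this comparison, assuming the pageable buffers are simply malloc'd here to stand in for memory handed over from elsewhere. The inputs are registered once, the output stays pageable, and cudaHostUnregister is called before the owner frees the memory. runHostRegister, matAdd and CHECK are hypothetical names.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t err_ = (call);                                      \
        if (err_ != cudaSuccess) {                                      \
            std::fprintf(stderr, "CUDA error %s at %s:%d\n",            \
                         cudaGetErrorString(err_), __FILE__, __LINE__); \
            std::exit(EXIT_FAILURE);                                    \
        }                                                               \
    } while (0)

// Element-wise matrix addition c = a + b over n = rows * cols elements.
__global__ void matAdd(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

bool runHostRegister(int rows, int cols) {
    const int n = rows * cols;
    const size_t bytes = size_t(n) * sizeof(float);

    // Stand-ins for pageable buffers that someone else allocated.
    float* hA = static_cast<float*>(std::malloc(bytes));
    float* hB = static_cast<float*>(std::malloc(bytes));
    float* hC = static_cast<float*>(std::malloc(bytes));
    if (!hA || !hB || !hC) { std::free(hA); std::free(hB); std::free(hC); return false; }
    for (int i = 0; i < n; ++i) { hA[i] = 1.0f; hB[i] = 2.0f; }

    // Page-lock the inputs once; hC is deliberately left pageable for comparison.
    CHECK(cudaHostRegister(hA, bytes, cudaHostRegisterDefault));
    CHECK(cudaHostRegister(hB, bytes, cudaHostRegisterDefault));

    float *dA, *dB, *dC;
    CHECK(cudaMalloc(&dA, bytes));
    CHECK(cudaMalloc(&dB, bytes));
    CHECK(cudaMalloc(&dC, bytes));

    CHECK(cudaMemcpy(dA, hA, bytes, cudaMemcpyHostToDevice));  // pinned -> device
    CHECK(cudaMemcpy(dB, hB, bytes, cudaMemcpyHostToDevice));  // pinned -> device
    const int threads = 256;
    matAdd<<<(n + threads - 1) / threads, threads>>>(dA, dB, dC, n);
    CHECK(cudaGetLastError());
    CHECK(cudaMemcpy(hC, dC, bytes, cudaMemcpyDeviceToHost));  // device -> pageable

    bool ok = true;
    for (int i = 0; i < n; ++i) ok = ok && (hC[i] == 3.0f);

    CHECK(cudaFree(dA)); CHECK(cudaFree(dB)); CHECK(cudaFree(dC));
    CHECK(cudaHostUnregister(hA)); CHECK(cudaHostUnregister(hB));  // before free()
    std::free(hA); std::free(hB); std::free(hC);
    return ok;
}
```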

cudaHostRegister without copying

Again, because we are on an Orin device: can we also skip the copy after calling cudaHostRegister? Yes.

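The test registers the host buffers with cudaHostRegister, obtains the matching GPU pointers with cudaHostGetDevicePointer, and passes those pointers to the kernel without any cudaMemcpy.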

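A minimal sketch of the no-copy variant, assuming a device that supports mapped pinned memory, as Orin does. The buffers are registered with the cudaHostRegisterMapped flag and the kernel receives the pointers returned by cudaHostGetDevicePointer. runHostRegisterNoCopy, enableMappedHost, matAdd and CHECK are hypothetical names; tolerating cudaErrorSetOnActiveProcess in enableMappedHost is an assumption of this sketch.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t err_ = (call);                                      \
        if (err_ != cudaSuccess) {                                      \
            std::fprintf(stderr, "CUDA error %s at %s:%d\n",            \
                         cudaGetErrorString(err_), __FILE__, __LINE__); \
            std::exit(EXIT_FAILURE);                                    \
        }                                                               \
    } while (0)

// Element-wise matrix addition c = a + b over n = rows * cols elements.
__global__ void matAdd(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

// Request mapped pinned memory. If the context already exists, some CUDA
// versions reject this with cudaErrorSetOnActiveProcess; the sketch assumes
// mapping is still available then (UVA platform) and clears the error.
static void enableMappedHost() {
    cudaError_t e = cudaSetDeviceFlags(cudaDeviceMapHost);
    if (e == cudaErrorSetOnActiveProcess) {
        cudaGetLastError();
    } else {
        CHECK(e);
    }
}

bool runHostRegisterNoCopy(int rows, int cols) {
    enableMappedHost();
    const int n = rows * cols;
    const size_t bytes = size_t(n) * sizeof(float);

    float* hA = static_cast<float*>(std::malloc(bytes));
    float* hB = static_cast<float*>(std::malloc(bytes));
    float* hC = static_cast<float*>(std::malloc(bytes));
    if (!hA || !hB || !hC) { std::free(hA); std::free(hB); std::free(hC); return false; }
    for (int i = 0; i < n; ++i) { hA[i] = 1.0f; hB[i] = 2.0f; }

    // Pin and map the existing pageable buffers into the GPU address space.
    CHECK(cudaHostRegister(hA, bytes, cudaHostRegisterMapped));
    CHECK(cudaHostRegister(hB, bytes, cudaHostRegisterMapped));
    CHECK(cudaHostRegister(hC, bytes, cudaHostRegisterMapped));
    float *dA, *dB, *dC;
    CHECK(cudaHostGetDevicePointer(&dA, hA, 0));
    CHECK(cudaHostGetDevicePointer(&dB, hB, 0));
    CHECK(cudaHostGetDevicePointer(&dC, hC, 0));

    const int threads = 256;
    matAdd<<<(n + threads - 1) / threads, threads>>>(dA, dB, dC, n);  // no cudaMemcpy
    CHECK(cudaGetLastError());
    CHECK(cudaDeviceSynchronize());  // results are visible in hC after this

    bool ok = true;
    for (int i = 0; i < n; ++i) ok = ok && (hC[i] == 3.0f);

    CHECK(cudaHostUnregister(hA)); CHECK(cudaHostUnregister(hB)); CHECK(cudaHostUnregister(hC));
    std::free(hA); std::free(hB); std::free(hC);
    return ok;
}
```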

Unified Memory

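Memory allocated from the CPU with cudaMallocManaged can be used directly by the GPU. For example, a kernel declared as `__global__ void printme(char *str)` can receive a managed pointer as `str`.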
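A runnable sketch built around that printme kernel: the CPU writes a string into a cudaMallocManaged buffer and the GPU prints it through the same pointer. The wrapper runPrintme is a hypothetical helper added so the example can be called and checked. The kernel uses printf("%s", str) instead of passing str as the format string, so stray % characters in the data cannot be misinterpreted.

```cuda
#include <cassert>
#include <cstdio>
#include <cstring>
#include <cuda_runtime.h>

// The kernel reads a string that the CPU wrote into managed memory.
__global__ void printme(char* str) {
    printf("%s", str);
}

// Returns true if allocation, launch and synchronization all succeed.
bool runPrintme() {
    const char msg[] = "hello world\n";
    char* str = nullptr;
    if (cudaMallocManaged(&str, sizeof(msg)) != cudaSuccess) return false;
    std::memcpy(str, msg, sizeof(msg));  // written directly by the CPU, no cudaMemcpy
    printme<<<1, 1>>>(str);              // the same pointer is used by the GPU
    bool ok = cudaGetLastError() == cudaSuccess;
    // Wait for the kernel before the CPU touches (or frees) the managed buffer.
    ok = (cudaDeviceSynchronize() == cudaSuccess) && ok;
    cudaFree(str);
    return ok;
}
```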

However, it is not recommended in safety-critical environments; see https://docs.nvidia.com/cuda/cuda-for-tegra-appnote/index.html#memory-selection

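The test allocates the matrix-addition inputs and output with cudaMallocManaged and passes the same pointers to the kernel without any copies.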

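A minimal sketch of matrix addition with managed memory, assuming row-major rows x cols float matrices. runManaged, matAdd and CHECK are hypothetical names. The cudaDeviceSynchronize after the launch matters: the CPU must not touch the managed buffers while the kernel may still be using them.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t err_ = (call);                                      \
        if (err_ != cudaSuccess) {                                      \
            std::fprintf(stderr, "CUDA error %s at %s:%d\n",            \
                         cudaGetErrorString(err_), __FILE__, __LINE__); \
            std::exit(EXIT_FAILURE);                                    \
        }                                                               \
    } while (0)

// Element-wise matrix addition c = a + b over n = rows * cols elements.
__global__ void matAdd(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

bool runManaged(int rows, int cols) {
    const int n = rows * cols;
    const size_t bytes = size_t(n) * sizeof(float);

    // One allocation per matrix, valid on both the CPU and the GPU.
    float *a, *b, *c;
    CHECK(cudaMallocManaged(&a, bytes));
    CHECK(cudaMallocManaged(&b, bytes));
    CHECK(cudaMallocManaged(&c, bytes));
    for (int i = 0; i < n; ++i) { a[i] = 1.0f; b[i] = 2.0f; }  // filled by the CPU

    const int threads = 256;
    matAdd<<<(n + threads - 1) / threads, threads>>>(a, b, c, n);  // no cudaMemcpy
    CHECK(cudaGetLastError());
    CHECK(cudaDeviceSynchronize());  // required before the CPU reads c

    bool ok = true;
    for (int i = 0; i < n; ++i) ok = ok && (c[i] == 3.0f);
    CHECK(cudaFree(a)); CHECK(cudaFree(b)); CHECK(cudaFree(c));
    return ok;
}
```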

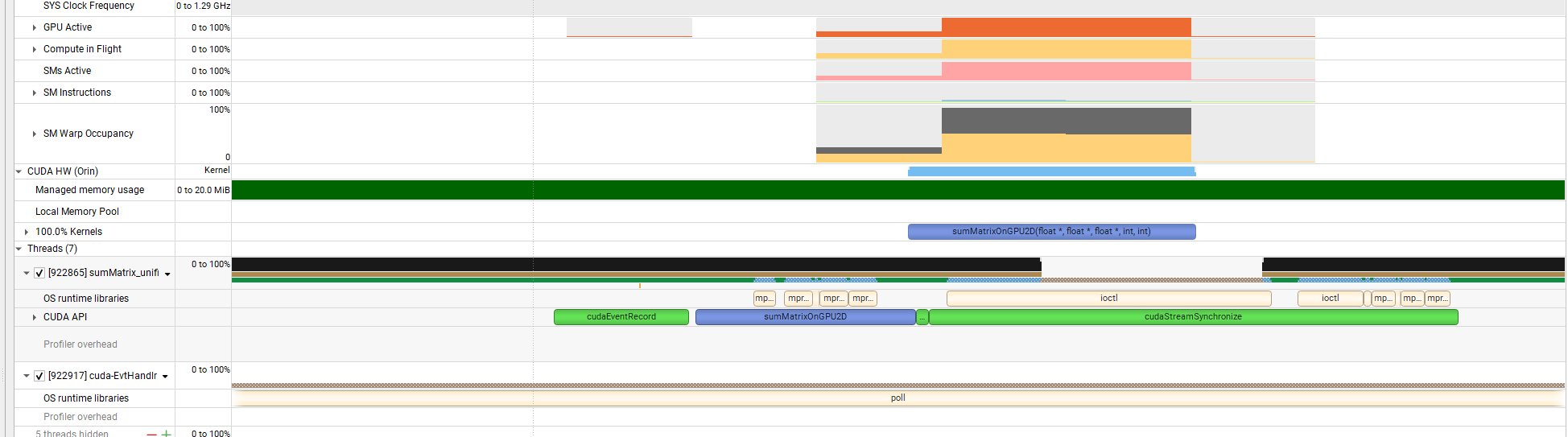

Corresponding nsys profile.

Zero-copy memory (zerocopy mem)

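Allocate with cudaHostAlloc (with the cudaHostAllocMapped flag) and obtain the corresponding GPU pointer with cudaHostGetDevicePointer. No copy is needed here either.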

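A minimal sketch of zero-copy memory: the buffers are allocated with cudaHostAlloc and the cudaHostAllocMapped flag, and the kernel is given the device pointers from cudaHostGetDevicePointer. runZeroCopy, enableMappedHost, matAdd and CHECK are hypothetical names, and tolerating cudaErrorSetOnActiveProcess in enableMappedHost is an assumption of this sketch rather than a requirement.

```cuda
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Abort with a message if a CUDA runtime call fails.
#define CHECK(call)                                                     \
    do {                                                                \
        cudaError_t err_ = (call);                                      \
        if (err_ != cudaSuccess) {                                      \
            std::fprintf(stderr, "CUDA error %s at %s:%d\n",            \
                         cudaGetErrorString(err_), __FILE__, __LINE__); \
            std::exit(EXIT_FAILURE);                                    \
        }                                                               \
    } while (0)

// Element-wise matrix addition c = a + b over n = rows * cols elements.
__global__ void matAdd(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

// Request mapped pinned memory; see the assumption in the lead-in about
// cudaErrorSetOnActiveProcess when a context already exists.
static void enableMappedHost() {
    cudaError_t e = cudaSetDeviceFlags(cudaDeviceMapHost);
    if (e == cudaErrorSetOnActiveProcess) {
        cudaGetLastError();  // clear the non-sticky error
    } else {
        CHECK(e);
    }
}

bool runZeroCopy(int rows, int cols) {
    enableMappedHost();
    const int n = rows * cols;
    const size_t bytes = size_t(n) * sizeof(float);

    // Pinned host buffers mapped into the GPU address space.
    float *hA, *hB, *hC;
    CHECK(cudaHostAlloc(&hA, bytes, cudaHostAllocMapped));
    CHECK(cudaHostAlloc(&hB, bytes, cudaHostAllocMapped));
    CHECK(cudaHostAlloc(&hC, bytes, cudaHostAllocMapped));
    for (int i = 0; i < n; ++i) { hA[i] = 1.0f; hB[i] = 2.0f; }

    float *dA, *dB, *dC;
    CHECK(cudaHostGetDevicePointer(&dA, hA, 0));
    CHECK(cudaHostGetDevicePointer(&dB, hB, 0));
    CHECK(cudaHostGetDevicePointer(&dC, hC, 0));

    const int threads = 256;
    matAdd<<<(n + threads - 1) / threads, threads>>>(dA, dB, dC, n);  // no cudaMemcpy
    CHECK(cudaGetLastError());
    CHECK(cudaDeviceSynchronize());  // results are visible through hC after this

    bool ok = true;
    for (int i = 0; i < n; ++i) ok = ok && (hC[i] == 3.0f);
    CHECK(cudaFreeHost(hA)); CHECK(cudaFreeHost(hB)); CHECK(cudaFreeHost(hC));
    return ok;
}
```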

Corresponding nsys profile.

Summary

On Orin devices, zerocopy mem is the preferred choice.

| Memory type | Allocation | Memory copy | Pointer passed to the kernel | Recommendation |
|---|---|---|---|---|
| pageable | malloc + cudaMalloc | cudaMemcpy pageable->Device | cudaMalloc memory | |
| pageable + cudaHostRegister | malloc + cudaHostRegister + cudaMalloc | cudaMemcpy pinned->Device | cudaMalloc memory | Recommended |
| pageable + cudaHostRegister + no cpy | malloc + cudaHostRegister + cudaHostGetDevicePointer | None | pointer from cudaHostGetDevicePointer | Recommended |
| pinned | cudaHostAlloc + cudaMalloc | cudaMemcpy pinned->Device | cudaMalloc memory | Recommended |
| pinned + no cpy | cudaHostAlloc | None | cudaHostAlloc memory | Recommended |
| Unified | cudaMallocManaged | None | cudaMallocManaged memory | |
| zero copy mem | cudaHostAlloc + cudaHostGetDevicePointer | None | pointer from cudaHostGetDevicePointer | Preferred |